Periklis Thivaios of IE Business School (formerly part of the AMF team at Wits) is speaking at RiskMinds International 2015 in Amsterdam on how banks can manage the risks from the Fintech industry. He will be hosting a boardroom risk discussion at RiskMinds International on Thursday 10th December at 10.20, posing the question, ‘How afraid should we be of Google, Apple and Facebook, and what can banks do about it?’

http://www.riskmindslive.com/periklis-thivaois-on-if-banks-should-fear-google/

Wednesday, December 16, 2015

Monday, November 30, 2015

AMF 2015 Project Presentations 23 and 24 November

Congratulations to the AMF BSc (Hons) class of 2015 for a wonderful two days of market-microstructure and computational finance final project presentations!

Thursday, November 5, 2015

Sunday, October 18, 2015

Deep jargon in deepmind thinking

DeepMind progress: "The DeepMinders found when using options, “a) The hi-tech bra that helps you beat breast X; b) Could Saccharin help beat X ?; c) Can fish oils help fight prostate X ?,” the model could easily predict that X = cancer, “regardless of the contents of the context document, simply because this is a very frequently cured entity in the Daily Mail corpus,” the paper stated."

http://www.techworld.com/big-data/why-does-google-need-deep-neural-network-deep-learning-3623340/

Interest in deep learning increased dramatically when Google bought UK-based DeepMind for £400m at the end of last year. Techworld tells you everything you need to know about artificial intelligence.

Scientists have fed an artificially intelligent system with Daily Mail articles so it can learn how natural language works. While it’s not quite HAL 9000, it’s a worrying thought for any left-wing techies.

This process, called deep learning, is old news. Researchers have been attempting to train algorithms since the 1970s but computational and data limitations slowed progress in the 1980s.

Now deep learning is enjoying a renaissance. Interest in the field hit a peak when Google paid £400 million for UK-based deep learning research group DeepMind at the end of last year.

Often called machine learning or neural networking, deep learning involves “training” a computational model so it can decipher natural language. The model relates terms and words to infer meaning once it is fed information. It’s then quizzed on this information and “learns” from the experience, like a child learning to communicate.

With improved computational power and an overwhelming availability of data, researchers have picked up deep learning theories once more, to begin the path to artificial intelligence.

Why the Daily Mail?

Machines have previously been taught to read documents and answer questions about their content, but their knowledge base was limited by the size of the document. With heaps of material online for algorithms to consume, systems can draw on a larger pool of natural language, giving them a deeper understanding of universal topics.

Two months ago, Google’s DeepMind revealed a novel technique for perfecting this previously tricky task using online news.

Researchers fed the system one million Daily Mail and CNN articles, queried it on them, and found the algorithm could correctly detect missing words or predict a headline. It's worth noting that the choice of source was based on the MailOnline's bullet point summary structure.

However, there was a challenge. Analysing an algorithm’s sophistication proves problematic with the Mail’s renowned sensationalist headlines.

The DeepMinders found when using options, “a) The hi-tech bra that helps you beat breast X; b) Could Saccharin help beat X ?; c) Can fish oils help fight prostate X ?,” the model could easily predict that X = cancer, “regardless of the contents of the context document, simply because this is a very frequently cured entity in the Daily Mail corpus,” the paper stated.
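The shortcut described in that quote can be sketched as a context-free baseline: a guesser that never reads the article and simply returns the most common answer it saw in training. This is only an illustration, not the method from the paper; the toy answer list and the function name `most_frequent_answer` are assumptions made up for the example.

```python
from collections import Counter


def most_frequent_answer(training_answers):
    """Return the answer seen most often in training, ignoring all context."""
    return Counter(training_answers).most_common(1)[0][0]


# Hypothetical toy answers standing in for masked entities in a news corpus.
toy_answers = ["cancer", "cancer", "diabetes", "cancer", "obesity"]
guess = most_frequent_answer(toy_answers)

# The three headline queries quoted in the article.
queries = [
    "The hi-tech bra that helps you beat breast X",
    "Could Saccharin help beat X ?",
    "Can fish oils help fight prostate X ?",
]

# The baseline gives the same guess for every query, whatever the document says.
predictions = {query: guess for query in queries}
for query, answer in predictions.items():
    print(f"{query!r} -> X = {answer}")
```

A baseline like this scoring well is exactly why such questions say little about whether a model has understood the context document.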

DeepMind has also seen breakthroughs like training machines to play Atari video games and online poker. The company's current goal is to create one general deep learning model that can improve services for its parent company, Google.

The Silicon Valley giant has heavily invested in deep learning. Its main business driver, search, is based on this technology, and other services like Google Translate, voice and mobile search as well as its Google Photos app are based on neural networks. Aside from DeepMind, it has teams of organically grown machine learning experts, led by AI specialist Jeff Dean and British cognitive psychologist and computer scientist Geoff Hinton.

Relying on AI has its issues though. Google has suffered several embarrassments at the hands of its algorithms, including tagging a black woman as a gorilla on Google Photos.

Why the surge?

Deep learning is at an early stage, yet it forms the basis of some of the largest, most profitable tech companies today. Services based on machine learning are what Google sells its advertising on, and competitors Microsoft, Apple and Facebook are rapidly making developments in the field.

One accelerator is the development of tools like graphics processing units, or GPUs, that cut machine training time.

“Stuff that would have taken a week to run now takes a few hours on a single machine,” says Dr Matthew Aylett, who works at Edinburgh University’s School of Informatics and is Chief Science Officer of Edinburgh-based text-to-speech company CereProc.

Following an experiment by Canadian researchers a few years ago, the use of GPUs to power deep neural networks “caught on like wildfire,” adds Will Ramey, product manager at GPU manufacturer NVIDIA.

NVIDIA GPU-powered deep learning powers many consumer products and services both on and offline. These include facial recognition technology for Facebook users, image identification in Google Photos, and speed sign reading and vehicle detection technology within Audi’s driverless cars. Baidu, China’s Google, is developing an AI-based health service, so users can tell their devices their symptoms and receive a diagnosis, and undoubtedly some well-targeted pharmaceutical advertising. Further, diabetes patients can expect to see technology that can detect early stages of blindness thanks to machine learning research by Deepsense.io competition entrants.

How much is it like a human brain?

Neural networks are inspired by the way human brains work, but very loosely.

“They are a bit like brains in the same way pulleys and strings are like muscles. There is a relationship but you wouldn’t say it is the same thing,” Dr Aylett explains. Much like big data tools, deep learning models are only as good as the data you feed them; they will not search for information the way a child could.

Will it continue to progress?

Theoretically the tools are in place, but it is the supporting infrastructure, like super-fast connection speeds, data availability and storage, and powerful, fast computers, that will need to keep up. Corporations' financial backing is an added incentive.

Key terms for deep learning

Deep neural network: A deep neural network is a development of the first theory of machine learning. It followed the early Perceptron learning algorithm, which was limited in its ability to represent the exclusive “or” (XOR): a single-layer Perceptron cannot learn a rule that is true when exactly one of two inputs is true. To resolve this problem, highlighted by early AI heavyweight Marvin Minsky, several layers of learning algorithms needed to be developed.
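The limitation Minsky highlighted is usually stated as the XOR problem, and that is the reading assumed here. A minimal sketch: a single-layer Perceptron learns plain "or" perfectly but can never get all four XOR cases right, while a hand-wired two-layer network can. The function names, learning rate and epoch count below are illustrative choices, not anything taken from the article.

```python
def step(z):
    """Threshold activation: fire (1) only when the weighted sum is positive."""
    return 1 if z > 0 else 0


def train_perceptron(samples, epochs=50, lr=1.0):
    """Classic single-layer Perceptron learning rule on two binary inputs."""
    w0, w1, b = 0.0, 0.0, 0.0
    for _ in range(epochs):
        for (x0, x1), target in samples:
            err = target - step(w0 * x0 + w1 * x1 + b)
            w0 += lr * err * x0
            w1 += lr * err * x1
            b += lr * err
    return w0, w1, b


def accuracy(weights, samples):
    """Fraction of samples the trained Perceptron classifies correctly."""
    w0, w1, b = weights
    hits = sum(step(w0 * x0 + w1 * x1 + b) == t for (x0, x1), t in samples)
    return hits / len(samples)


INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]
OR_DATA = [(x, int(x[0] or x[1])) for x in INPUTS]
XOR_DATA = [(x, x[0] ^ x[1]) for x in INPUTS]

or_acc = accuracy(train_perceptron(OR_DATA), OR_DATA)
xor_acc = accuracy(train_perceptron(XOR_DATA), XOR_DATA)


def two_layer_xor(x0, x1):
    """Hand-wired two-layer network: XOR = OR and not AND."""
    h_or = step(x0 + x1 - 0.5)
    h_and = step(x0 + x1 - 1.5)
    return step(h_or - h_and - 0.5)


print("single layer on OR :", or_acc)
print("single layer on XOR:", xor_acc)
print("two layers on XOR  :", [two_layer_xor(*x) for x in INPUTS])
```

No straight line separates the XOR cases, so no amount of extra training helps the single layer; the hidden layer is what makes the rule representable.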

Unsupervised versus supervised learning: A supervised neural network must have been told the answer to your question at some point in order to learn it. This might be used for object recognition, to tell a face from a car, for example. Unsupervised learning involves throwing information at a machine and hoping it will learn something you haven’t trained it for, because it is able to cluster data to understand patterns. In object recognition this means it will group shapes together and conclude that they are similar. But it is often used in chess or online gaming AI.
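The difference can be sketched on toy one-dimensional data: the supervised version is given a label with every example, while the unsupervised version only sees the numbers and has to group them itself. Nearest-centroid classification and two-means clustering are stand-ins chosen for brevity, not neural networks, and the data and labels are invented for the example.

```python
def fit_centroids(labelled):
    """Supervised: average the values seen for each label we were told about."""
    sums, counts = {}, {}
    for value, label in labelled:
        sums[label] = sums.get(label, 0.0) + value
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}


def classify(centroids, value):
    """Predict the label whose centroid lies closest to the new value."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))


def two_means(values, iterations=10):
    """Unsupervised: split unlabelled values into two groups (1-D k-means, k=2).

    Assumes the values contain at least two distinct numbers.
    """
    lo, hi = min(values), max(values)
    for _ in range(iterations):
        near_lo = [v for v in values if abs(v - lo) <= abs(v - hi)]
        near_hi = [v for v in values if abs(v - lo) > abs(v - hi)]
        lo = sum(near_lo) / len(near_lo)
        hi = sum(near_hi) / len(near_hi)
    return sorted(near_lo), sorted(near_hi)


# Hypothetical feature values; the labels exist only in the supervised case.
labelled = [(1.0, "car"), (1.2, "car"), (5.0, "face"), (5.3, "face")]
centroids = fit_centroids(labelled)
print(classify(centroids, 1.1), classify(centroids, 4.9))

unlabelled = [1.0, 1.2, 0.9, 5.0, 5.3, 4.8]
groups = two_means(unlabelled)
print(groups)
```

The clustering finds the same two groups but cannot name them; attaching "car" or "face" to a group still needs someone to supply the answer.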

GPUs: Graphics processing units, a term popularised by NVIDIA in the late 1990s, can be found in cars, powering video games, in home entertainment systems and tablets.

Linear regression: Computers fit a line to the relationship between two related variables, like lung cancer and smoking. Deep neural networks can perform non-linear multiple regression, which effectively predicts unknown variables if you have enough data to train them.
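As a rough sketch of what fitting a line means, the ordinary least-squares formulas for a single predictor can be written out directly. The data below is invented for the illustration; the second data set shows why a straight line is not enough when the relationship is curved.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one predictor: returns (slope, intercept)."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical data lying exactly on y = 2x + 1.
slope, intercept = fit_line([0, 1, 2, 3, 4], [1, 3, 5, 7, 9])
print(slope, intercept)

# Hypothetical curved data, y = x squared: the best straight line is flat,
# so it misses the pattern entirely.
curve_slope, curve_intercept = fit_line([-2, -1, 0, 1, 2], [4, 1, 0, 1, 4])
print(curve_slope, curve_intercept)
```

The flat line on the curved data is the gap that non-linear models, neural networks among them, are meant to close.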

Speech recognition: When you play audio or speak and a computer tells you what you said, or types it.

Speech synthesis: When you type in text and then it speaks, like Siri or OK Google.

Wednesday, October 14, 2015

Monday, October 12, 2015

Saturday, October 10, 2015

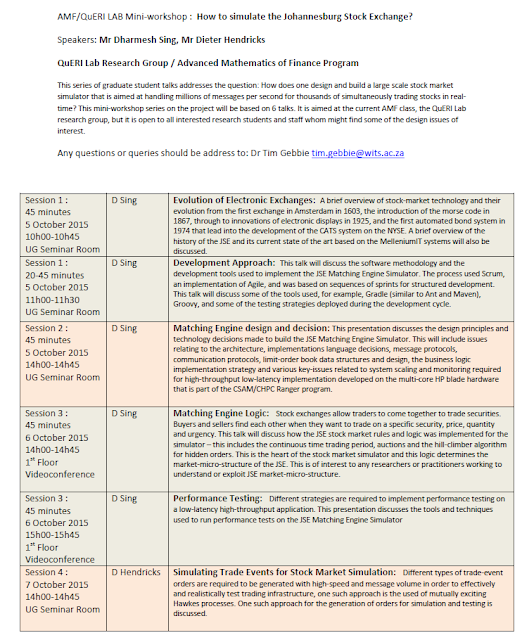

AMF/QuERI Lab Mini-workshop: How to simulate the Johannesburg Stock Exchange?

Thank you to Dharmesh and Dieter for making advanced concepts more readily accessible this week during the mini-workshop on how to design, develop and test a high-throughput, low-latency simulator of the JSE matching engine, market and data gateways based on the JSE documentation. This was a wonderful journey from the memory management issues of L1 and L2 caches to the inner workings of the HPPC Java libraries and across to Hawkes processes!

Thursday, October 1, 2015

CoE-MaSS seminars

Piketty - livestreaming to Wits on 1 Oct:

http://www.wits.ac.za/newsroom/newsitems/201509/27386/news_item_27386.html

upcoming weekly seminar:

Sunday, September 20, 2015

Friday, September 11, 2015

Papers for Aug 2015

Preprint - a collaborative output from the Fields Institute

Congrats Dieter!

http://arxiv.org/ftp/arxiv/papers/1509/1509.02900.pdf

And featuring pictures which paint a thousand words:

Thursday, September 10, 2015

Cool BEC Colloquium at Berkeley

On the colloquium presented by Kay Kirkpatrick on 10 Sept

The discussion of Bose-Einstein condensation (BEC) and nonlinear Schrödinger equations (NLS) by Kay Kirkpatrick for the 1st colloquium of the 2015/2016 academic year at Berkeley today was excellent. For a non-physicist, she teased out the issues for solutions of the NLS, describing the tension between the Laplacian and non-linear terms, to review recent results on mean field limits and local interactions at very low temperatures. The talk for a general audience included a tight summary of the Hilbert space foundations and notation. Not only did she do the bosenova, but she also went some way to addressing Hilbert's 6th problem. The description of that cold phenomenon was cool.

Here's a link to the blog by T. Tao on a related talk by Natasa Pavlovic, who was also in the audience. Pavlovic gave a more intensive and research-focussed talk at the MSRI last week. T. Tao's blog includes short reviews of classical and quantum mechanics and the NLS: https://terrytao.wordpress.com/2009/11/26/from-bose-einstein-condensates-to-the-nonlinear-schrodinger-equation/

Here's a physics magazine article on the bosenova: http://physicsworld.com/cws/article/news/2008/aug/27/cold-atoms-explode-like-cloverleafs

------

DLW

visitor (as non-member) to MSRI, Aug-Sept 2015

See also http://dianewilcox.blogspot.com/2015/08/mindscapes.html


Saturday, August 15, 2015

Congratulations to our data curators

Congratulations to our data curators: Tim for leading the process and especially Dieter, Fayyaaz and Mike for all the work done towards setting up the data infrastructure for our projects. With 100s of person-hours clocked up, we appreciate that there is much in the details for seamless access.

And thank you for the ongoing fine-tuning and maintenance. Now for some analysis...

THANK YOU from the rest of the team !!


